Sitecore does not provide a per-site robots.txt out of the box, so we need to build this functionality ourselves. In this article, we will learn how to dynamically create robots.txt for a multi-site Sitecore setup.

We need to create a custom processor that returns the robots.txt content as plain text, and then register it in Sitecore's httpRequestBegin pipeline.

How to Dynamically Create robots.txt for Multi-Site in Sitecore

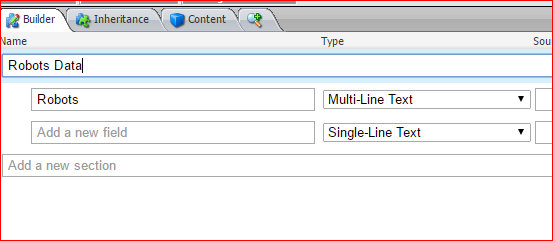

Step 1: Create a Robots data template and add a field named Site Robots.

Step 2: Set the default text for the robots.txt file in the template's standard values. For example, search engines should not crawl Sitecore's own pages such as ShowConfig.aspx, so we can add the text below to the standard values to block crawlers from the Sitecore files:

    User-agent: *
    Disallow: /sitecore

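Step 3: Based on your content architecture, either make an existing template inherit from the Robots data template or create an item derived from it, then add the site-specific text in the editor. The processor in Step 4 reads the Site Robots field from each site's start item, so that item must expose the field. For example: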

    User-agent: *
    Disallow:
    Sitemap: http://www.example.com/none-standard-location/sitemap.xml

Step 4: Create the custom HttpRequestProcessor

Create a class file and add the code below to it.

    using System;
    using System.Web;
    using Sitecore.Data.Items;
    using Sitecore.Pipelines.HttpRequest;

    namespace Business.Services.Robots
    {
        public class RobotsProcessor : HttpRequestProcessor
        {
            public override void Process(HttpRequestArgs args)
            {
                HttpContext context = HttpContext.Current;
                if (context == null)
                {
                    return;
                }

                // Match on the path only, so a query string does not defeat the check.
                string requestPath = context.Request.Url.AbsolutePath;
                if (string.IsNullOrEmpty(requestPath) ||
                    !requestPath.EndsWith("/robots.txt", StringComparison.OrdinalIgnoreCase))
                {
                    return;
                }

                // Hard-coded fallback, used when no start item or field value is available.
                string robotsTxtContent = "User-agent: *" + Environment.NewLine + "Disallow: /sitecore";

                if (Sitecore.Context.Site != null && Sitecore.Context.Database != null)
                {
                    // Start item (home node) of the site resolved for this request.
                    Item homeNode = Sitecore.Context.Database.GetItem(Sitecore.Context.Site.StartPath);
                    if (homeNode != null)
                    {
                        if ((homeNode.Fields["Site Robots"] != null) &&
                            (!string.IsNullOrEmpty(homeNode.Fields["Site Robots"].Value)))
                        {
                            robotsTxtContent = homeNode.Fields["Site Robots"].Value;
                        }
                    }
                }

                // Write the content as plain text and stop further processing of the request.
                context.Response.ContentType = "text/plain";
                context.Response.Write(robotsTxtContent);
                context.Response.End();
            }
        }
    }

The code is straightforward. If the request path ends with /robots.txt, the request is intercepted: the processor fetches the robots.txt content from the Site Robots field of the site's start item and writes it to the response as plain text. If the field is left blank on the item, the text from the standard values is returned; if the start item or the field cannot be found, the hard-coded default in the processor is used.
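
The two decisions the processor makes (is this a robots.txt request, and which text should be returned) can also be isolated as pure functions, which makes them easy to unit test without a Sitecore context. The following is only a sketch: `RobotsRequestHelper`, `IsRobotsRequest`, and `ResolveContent` are hypothetical names that are not part of Sitecore or of the processor above, and matching on the URL path rather than the full URL string is an assumption made here so that a query string does not break the check.

```csharp
using System;

// Hypothetical helper class; not part of Sitecore or the processor above.
public static class RobotsRequestHelper
{
    // True when the path part of the URL ends with "/robots.txt".
    // The query string is ignored because only AbsolutePath is inspected.
    public static bool IsRobotsRequest(Uri url)
    {
        return url != null &&
               url.AbsolutePath.EndsWith("/robots.txt", StringComparison.OrdinalIgnoreCase);
    }

    // Prefers the value read from the item field; falls back to the default
    // when the field value is null or empty.
    public static string ResolveContent(string fieldValue, string defaultContent)
    {
        return string.IsNullOrEmpty(fieldValue) ? defaultContent : fieldValue;
    }
}
```

With helpers like these, the `Process` method only has to read the field value from the start item and pass it through, while the matching and fallback rules can be covered by ordinary unit tests.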

Step 5: The final step is to patch the configuration. The config must allow the txt extension, and we need to register the RobotsProcessor class in the httpRequestBegin pipeline.

    <?xml version="1.0" encoding="utf-8" ?>
    <configuration xmlns:patch="http://www.sitecore.net/xmlconfig/">
      <sitecore>
        <pipelines>
          <preprocessRequest>
            <processor type="Sitecore.Pipelines.PreprocessRequest.FilterUrlExtensions, Sitecore.Kernel">
              <param desc="Allowed extensions (comma separated)">aspx, ashx, asmx, txt</param>
            </processor>
          </preprocessRequest>
          <httpRequestBegin>
            <processor type="Business.Services.Robots.RobotsProcessor, Business.Services"
                       patch:before="processor[@type='Sitecore.Pipelines.HttpRequest.UserResolver, Sitecore.Kernel']"/>
          </httpRequestBegin>
        </pipelines>
      </sitecore>
    </configuration>

Browse the site and request the robots.txt URL; you should see the dynamically generated robots.txt text. The same code supports multiple sites without any changes, because it reads the field from the start item of whichever site handles the request. Create a language version of the item and add the content to it.
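
To check several sites in one go instead of browsing each one by hand, a small console program can request /robots.txt from each host name and print what comes back. This is a minimal sketch, not part of the Sitecore solution: the host names `site-a.example` and `site-b.example` are placeholders for your own site bindings, the use of HTTPS is an assumption, and `RobotsSmokeTest`, `BuildRobotsUrl`, and `PrintRobotsAsync` are hypothetical names.

```csharp
using System;
using System.Net.Http;
using System.Threading.Tasks;

// Hypothetical smoke test; replace the host names with your own site bindings.
public static class RobotsSmokeTest
{
    // Builds the robots.txt URL for a host name (HTTPS is assumed here).
    public static Uri BuildRobotsUrl(string host)
    {
        return new Uri("https://" + host + "/robots.txt");
    }

    // Requests robots.txt for one host and prints the status code and body.
    public static async Task PrintRobotsAsync(HttpClient client, string host)
    {
        Uri url = BuildRobotsUrl(host);
        try
        {
            using (HttpResponseMessage response = await client.GetAsync(url))
            {
                string body = await response.Content.ReadAsStringAsync();
                Console.WriteLine("--- " + url + " (" + (int)response.StatusCode + ") ---");
                Console.WriteLine(body);
            }
        }
        catch (HttpRequestException ex)
        {
            // Unreachable host, DNS failure, TLS error, and similar problems.
            Console.WriteLine("--- " + url + " failed: " + ex.Message + " ---");
        }
    }

    public static async Task Main()
    {
        // Placeholder host names, one per Sitecore site definition.
        string[] hosts = { "site-a.example", "site-b.example" };

        using (var client = new HttpClient())
        {
            foreach (string host in hosts)
            {
                await PrintRobotsAsync(client, host);
            }
        }
    }
}
```

Each site should print the text stored on its own start item, which makes it easy to spot a site that is still falling back to the default.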
